Using GPUs

The ddg and sbg nodes contain GPU cards that can greatly accelerate certain types of parallel computing tasks via the CUDA and OpenCL frameworks.

Access to GPU nodes

Access to GPU nodes is available free of charge to QM Researchers. Please test your job on the short queue and check that the GPU is being used. Then contact us to request to be added to the allowed user list, so that you can use the GPU nodes fully. Note that access to GPU nodes is not permitted for Undergraduate and MSc students, or for external users.

Short queue GPU testing

QM Researchers should test their GPU code on the 1-hour short queue before requesting full GPU node access for the first time. When requesting node access, please provide supporting evidence of a working GPU job (a job ID, or a screenshot of nvidia-smi output); see below for more information and examples.
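One way to produce that evidence is to have the test job print its own job ID and an nvidia-smi snapshot into its output file while your code is running. The following is a minimal sketch, not a provided tool. The function name record_gpu_evidence is hypothetical, and it relies on the JOB_ID variable that Grid Engine sets inside jobs.

```shell
#!/bin/bash
# Hypothetical helper: print evidence that a job can see and use its GPU.
# JOB_ID is set by Grid Engine inside a job; it is empty when run elsewhere.
record_gpu_evidence() {
    echo "Job ID: ${JOB_ID:-not running under the scheduler}"
    if ! command -v nvidia-smi >/dev/null 2>&1; then
        echo "nvidia-smi not found: is this running on a GPU node?" >&2
        return 1
    fi
    # Snapshot of the GPUs presented to this job and the processes using them
    nvidia-smi
}
```

For example, you could start ./run_code.sh in the background with &, sleep for a few minutes while it initialises the GPU, call record_gpu_evidence, and then wait for the code to finish. The snapshot is only useful if it is taken while your code is actually using the GPU.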

Applications with GPU support

A considerable number of scientific and analytical applications have GPU support. While some have GPU support out of the box, such as Matlab and Ansys, others may require specific GPU-ready builds. These may appear in the module avail list with a -gpu suffix. If you need GPU support added to a specific application, please submit a request for a GPU build and provide some test data.

Be aware that not every GPU-capable application will run faster on a GPU. For example, an application may offer GPU acceleration only in specific subroutines. The rest of its code is not GPU-aware and will still run on the CPU.

Submitting jobs to GPU nodes

To request a GPU, use the -l gpu=<count> option in your job submission. Jobs must request 8 cores per GPU (-pe smp 8) and 11GB RAM per core (-l h_vmem=11G). This keeps the cores and RAM requested proportionate to the number of GPUs requested (please see the examples below).
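The proportionality rule is simple arithmetic: cores = 8 × GPUs, and total system RAM = cores × 11G. The sketch below is a hypothetical helper (gpu_directives is not a provided tool). It prints the matching #$ lines for a given number of GPUs, in the same form as the examples on this page.

```shell
#!/bin/bash
# Hypothetical helper: print the resource request lines for N GPUs using the
# standard ratio of 8 cores per GPU and 11G of system RAM per core.
gpu_directives() {
    local gpus="$1"
    local cores=$(( gpus * 8 ))        # 8 cores per GPU
    local total_ram=$(( cores * 11 ))  # 11G per core, so total RAM in GB
    printf '#$ -pe smp %d # %d cores (8 cores per GPU)\n' "$cores" "$cores"
    printf '#$ -l h_vmem=11G # 11 * %d = %dG total RAM\n' "$cores" "$total_ram"
    printf '#$ -l gpu=%d # request %d GPU(s)\n' "$gpus" "$gpus"
}
```

For example, gpu_directives 2 prints the same -pe smp 16, h_vmem=11G (176G total) and gpu=2 lines as the two-GPU example. For whole-node requests, the examples instead use -l exclusive and omit h_vmem.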

The scheduler will automatically select a GPU node based on availability and on any other resource parameters you specify.

For users with access to nodes that have 48 cores or more, you may request 12 cores per GPU to make better use of the available resources. To request 12 cores per GPU, change your RAM request to -l h_vmem=7.5G (eligible GPU high memory jobs may request up to 20G RAM per core with 12-core jobs). You must also ensure your job includes one of the following parameters:

- -l cluster=andrena (Andrena cluster users only)
- -l node_type=X, where X is one of ddg, rdg or xdg (RDG and XDG nodes are available only to users from the Science and Engineering faculty)
- -l gpuhighmem (Science and Engineering faculty users only)

Failure to add one of these parameters will result in the job being rejected.
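The rules above can be summarised as a small pre-submission check. This is a hypothetical sketch that only mirrors the rules as written on this page; the scheduler's own checks are authoritative. It accepts 8 cores per GPU, and accepts 12 cores per GPU only when one of the required parameters is also given. It does not check h_vmem.

```shell
#!/bin/bash
# Hypothetical pre-submission check mirroring the cores-per-GPU rules above.
# Usage: check_gpu_request CORES GPUS [RESOURCE...]
#   e.g. check_gpu_request 24 2 node_type=ddg
# h_vmem is not checked: use 11G with 8 cores per GPU, 7.5G with 12.
check_gpu_request() {
    local cores="$1" gpus="$2"
    shift 2
    if (( gpus < 1 )); then
        echo "invalid: request at least one GPU"
        return 1
    fi
    if (( cores % gpus != 0 )); then
        echo "invalid: cores must be a whole multiple of the GPU count"
        return 1
    fi
    local per_gpu=$(( cores / gpus ))
    if (( per_gpu == 8 )); then
        echo "ok: 8 cores per GPU"
        return 0
    fi
    if (( per_gpu == 12 )); then
        local res
        # 12 cores per GPU also needs one of the listed resource parameters
        for res in "$@"; do
            case "$res" in
                cluster=andrena|node_type=ddg|node_type=rdg|node_type=xdg|gpuhighmem)
                    echo "ok: 12 cores per GPU with ${res}"
                    return 0
                    ;;
            esac
        done
        echo "rejected: 12 cores per GPU also needs cluster=andrena, node_type=ddg|rdg|xdg or gpuhighmem"
        return 1
    fi
    echo "invalid: request 8 cores per GPU (or 12 where eligible)"
    return 1
}
```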

We have also enabled the short queue on all GPU node types. It can be used before you have been granted access to run longer GPU jobs. Jobs submitted to the short queue have a higher priority than main queue jobs, and will run as soon as the node becomes free and no other jobs are running in the main queue.

Selecting a specific GPU type

For compatibility, you may optionally require a specific GPU type. Nodes with Volta V100 GPUs may be selected with -l gpu_type=volta, and Ampere A100 nodes with -l gpu_type=ampere.
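If your code depends on a particular GPU generation, it can help for the job to confirm which model it was given before starting. The sketch below assumes the model names reported by nvidia-smi contain "V100" or "A100", as in the "Tesla V100-PCIE..." output shown further down this page. require_gpu_model is a hypothetical helper.

```shell
#!/bin/bash
# Intended for jobs submitted with -l gpu_type=ampere (or gpu_type=volta).
# Hypothetical helper: fail early if any presented GPU is not the expected model.
# $1 is a substring of the model name, e.g. "A100" or "V100" (an assumption
# based on how nvidia-smi usually names these cards).
require_gpu_model() {
    local want="$1" name names
    names="$(nvidia-smi --query-gpu=name --format=csv,noheader)" || return 1
    while read -r name; do
        if [[ "$name" != *"$want"* ]]; then
            echo "expected model ${want} but found: ${name}" >&2
            return 1
        fi
    done <<< "$names"
    echo "all presented GPUs match ${want}"
}
```

In a job script, a line such as require_gpu_model A100 || exit 1 placed before ./run_code.sh would stop the job early, rather than letting it run on an unexpected card.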

GPU card allocation

Do not set the CUDA_VISIBLE_DEVICES variable

For the reasons documented below, please do not set the CUDA_VISIBLE_DEVICES variable in your job scripts.

We have enabled GPU device cgroups (Linux Control Groups) across all GPU nodes on Apocrita. This means your job is only presented with the GPU cards allocated to it by the scheduler, which prevents applications from attaching to GPUs that have not been allocated to the job.

Previously, it was necessary to set the CUDA_VISIBLE_DEVICES variable in job scripts to ensure the correct GPU was used in the job. However, this was only a workaround until GPU device cgroups were applied. You should no longer set this variable in your job scripts.

Inside your job, the GPU cards presented to it always appear as devices 0 to N-1, where N is the number of GPU cards you have requested. The table below shows the devices presented to a job for each GPU resource request:

| GPUs Requested | Devices Presented |
|---|---|
| 1 | 0 |
| 2 | 0, 1 |
| 3 | 0 - 2 |
| 4 | 0 - 3 |
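To confirm this numbering from inside a job, you can compare the indices nvidia-smi reports with the expected range 0 to N-1. This is a minimal sketch. check_presented_gpus is a hypothetical helper, and N is passed in by hand because this page does not describe an environment variable holding the requested GPU count.

```shell
#!/bin/bash
# Hypothetical helper: check that the job sees exactly devices 0..N-1.
# Usage: check_presented_gpus N   (N = the count you requested with -l gpu=N)
check_presented_gpus() {
    local requested="$1" expected actual
    # Expected job-local indices, e.g. "0 1 2" for N=3
    expected="$(seq 0 $(( requested - 1 )) | tr '\n' ' ')"
    # Indices actually presented to the job, as reported by nvidia-smi
    actual="$(nvidia-smi --query-gpu=index --format=csv,noheader | tr -d ' ' | tr '\n' ' ')"
    if [[ "$actual" != "$expected" ]]; then
        echo "expected devices ${expected% } but found ${actual% }" >&2
        return 1
    fi
    echo "devices presented: ${actual% }"
}
```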

Checking GPU usage

Checking GPU usage with nvidia-smi

GPU usage can be checked with the nvidia-smi command, e.g.:

```
$ nvidia-smi -l 1
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  Tesla V100-PCIE...  On   | 00000000:06:00.0 Off |                    0 |
| N/A   70C    P0   223W / 250W |  12921MiB / 16160MiB |     97%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   1  Tesla V100-PCIE...  On   | 00000000:2F:00.0 Off |                    0 |
| N/A   30C    P0    23W / 250W |      4MiB / 32510MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   2  Tesla V100-PCIE...  On   | 00000000:86:00.0 Off |                    0 |
| N/A   30C    P0    23W / 250W |      6MiB / 32510MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   3  Tesla V100-PCIE...  On   | 00000000:D8:00.0 Off |                    0 |
| N/A   31C    P0    23W / 250W |      6MiB / 32510MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A      1557      C   python                          12915MiB |
+-----------------------------------------------------------------------------+
```

In this example, we can see that the process is using GPU 0. The -l 1 option tells nvidia-smi to repeat its output every second. If this is run inside a job, GPU 0 is the card you have been allocated, which might not be system device 0.
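The -l option also works with nvidia-smi's query mode. This can be used to keep a lightweight CSV log of GPU activity for the whole run. This is a minimal sketch: start_gpu_log and stop_gpu_log are hypothetical helpers, and the 60-second default interval is an arbitrary choice.

```shell
#!/bin/bash
# Hypothetical helper: log GPU utilisation and memory to a CSV file in the
# background while the job runs. Sets GPU_LOG_PID so the logger can be stopped.
start_gpu_log() {
    local logfile="$1" interval="${2:-60}"
    # --query-gpu with -l repeats the query every $interval seconds
    nvidia-smi --query-gpu=timestamp,index,utilization.gpu,memory.used,memory.total \
        --format=csv -l "$interval" > "$logfile" &
    GPU_LOG_PID=$!
}

stop_gpu_log() {
    # Stop the background nvidia-smi loop (ignore errors if it already exited)
    kill "$GPU_LOG_PID" 2>/dev/null || true
    wait "$GPU_LOG_PID" 2>/dev/null || true
}
```

A job script would typically call start_gpu_log gpu_usage.csv 60 before ./run_code.sh, and stop_gpu_log afterwards. The log file is overwritten each time the logger starts.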

If you SSH into a GPU node and run nvidia-smi, you will see all GPU devices on the system by their real ID, rather than by the allocated device number. Similarly, the SGE_HGR_gpu environment variable inside jobs and the qstat -j JOB_ID command show the actual GPU device granted.
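To make later troubleshooting easier, a job can print both views of its allocation at start-up. This is a minimal sketch. log_gpu_allocation is a hypothetical helper that echoes the scheduler-provided JOB_ID and SGE_HGR_gpu variables as-is, since their exact value format is not described here. It prints them together with the job-local device indices.

```shell
#!/bin/bash
# Hypothetical helper: print the physical GPU(s) granted by the scheduler
# alongside the device indices the job itself sees.
log_gpu_allocation() {
    echo "Job ID: ${JOB_ID:-unknown} on host ${HOSTNAME:-unknown}"
    # Physical device(s) granted, as recorded by the scheduler
    echo "SGE_HGR_gpu: ${SGE_HGR_gpu:-<not set>}"
    # Job-local view: numbering starts at device 0 because of device cgroups
    if command -v nvidia-smi >/dev/null 2>&1; then
        echo "Local devices: $(nvidia-smi --query-gpu=index --format=csv,noheader | tr '\n' ' ')"
    fi
}
```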

Checking GPU usage with gpu-usage

Another tool to check your GPU job is gpu-usage:

```
$ gpu-usage
--------------------
Hostname: sbg2
Fri 17 Jun 14:36:03 BST 2022
Free GPU: 2 of 4
--------------------
GPU0: User: abc123 Process: gmx Utilization: 53%
GPU1: User: bcd234 Process: python Utilization: 78%
GPU2: Not in use.
GPU3: Not in use.
User: abc123 JobID: 2345677 GPU Allocation: 1 Queue: all.q
User: bcd234 JobID: 2345678 GPU Allocation: 1 Queue: all.q
User: cde345 JobID: 2345679 GPU Allocation: 1 Queue: all.q
Warning! GPUs requested but not used!
```

In this example, we can see that two jobs (2345677 and 2345678) are correctly using the GPUs. The third job (2345679), from user cde345, has requested a GPU but is not using it.

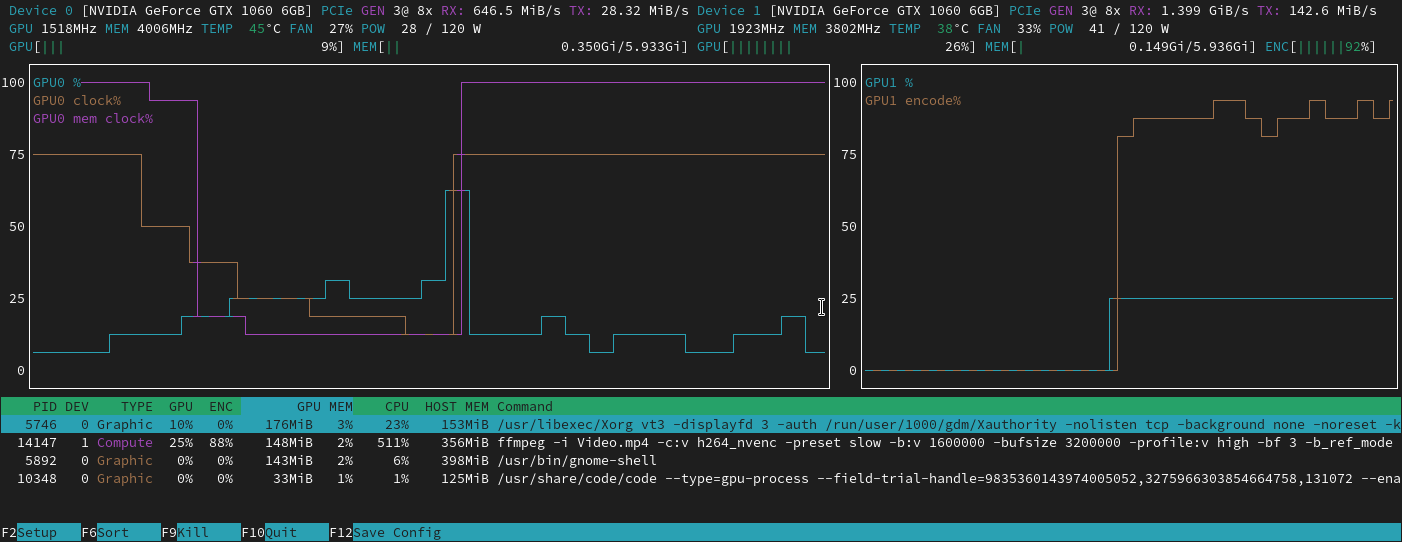
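gpu-usage reports on the whole node. A job can also check its own GPUs, for example a few minutes after starting, and write a warning to its log if none of them show any activity. This is a minimal sketch; warn_if_gpu_idle is hypothetical. A single sample can read 0% during CPU-only phases of a run, so treat a warning as a prompt to investigate rather than as proof that the GPU is unused.

```shell
#!/bin/bash
# Hypothetical helper: warn if none of the GPUs presented to this job are busy.
# Takes a single utilisation sample per GPU from nvidia-smi.
warn_if_gpu_idle() {
    local util busy=0
    while read -r util; do
        # Skip anything that is not a plain number (e.g. "[N/A]")
        if [[ "$util" =~ ^[0-9]+$ ]] && (( util > 0 )); then
            busy=1
        fi
    done < <(nvidia-smi --query-gpu=utilization.gpu --format=csv,noheader,nounits)
    if (( busy == 0 )); then
        echo "Warning: GPU requested but no utilisation detected" >&2
        return 1
    fi
    echo "GPU utilisation detected"
}
```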

Checking GPU usage with nvtop and nvitop

Two other tools for checking your GPU job are nvtop and nvitop. Both are available in the nvtools module:

```shell
module load nvtools
```

To run nvtop:

```shell
nvtop
```

nvtop provides a colourised view of GPU activity on a node, in a layout similar to the popular htop system monitor. More information can be found in its documentation.

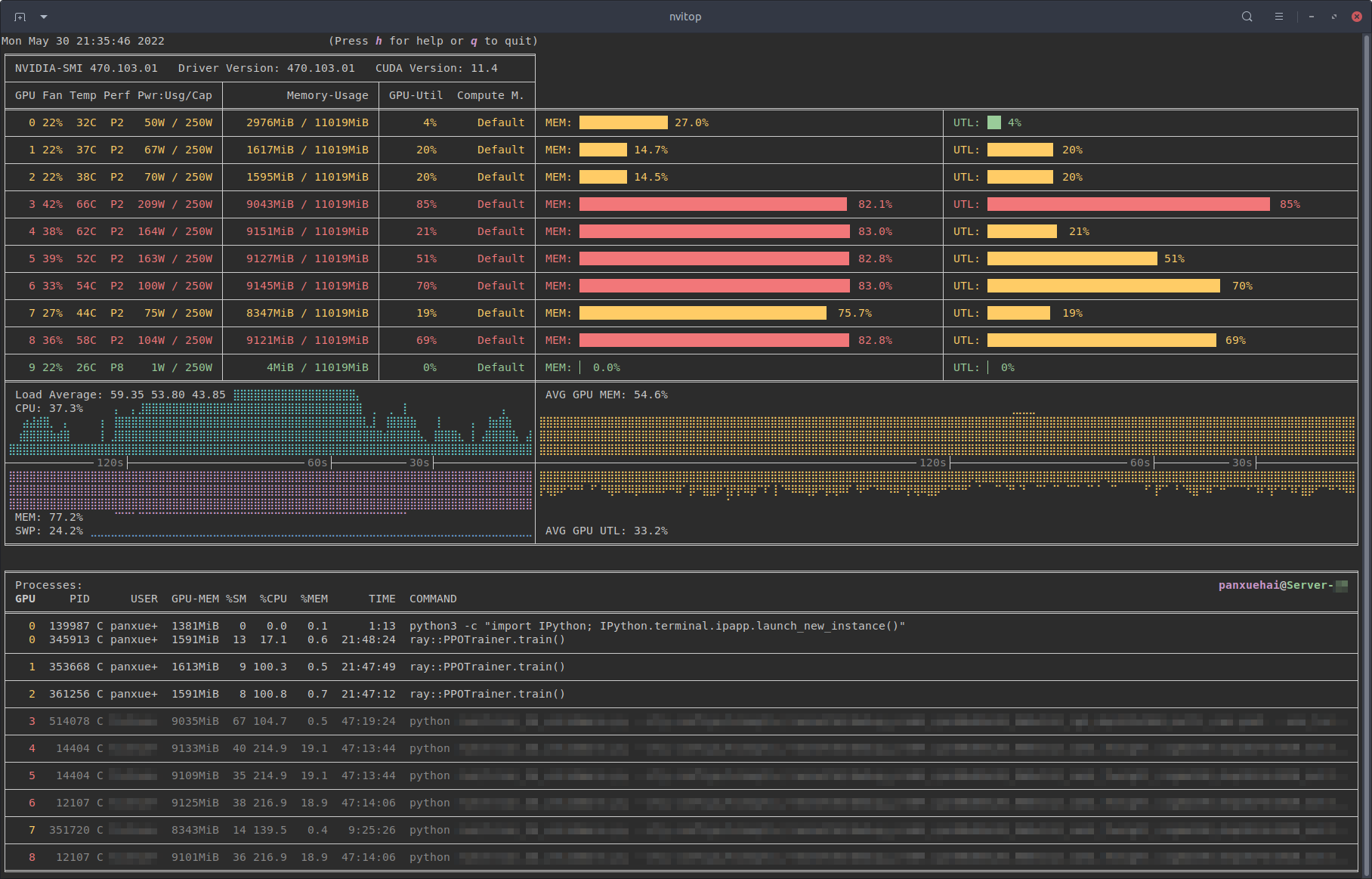

To run nvitop:

```shell
nvitop
```

nvitop also provides a colourised view of GPU activity on a node, with a slightly different layout from nvtop. Press h for help or q to return to the terminal. nvitop has many powerful options, which can be explored in its documentation.

Example job submissions

The following examples show the basic outline of job scripts for GPU nodes. The general rule for compute nodes is to request only the cores and RAM you will actually use. Our GPU jobs, however, are GPU-centric, so requests should be based on the number of GPUs required.

For simplicity, GPU jobs must request 8 cores per GPU, and 11GB RAM per core, as shown in our examples below and other documentation pages (for example TensorFlow). Whilst it is possible for some users to request 12 cores per GPU by requesting extra resources, we have not provided a generic example for that here. Please refer to other documentation pages on this site for more information.

h_vmem does not need to account for GPU RAM

The h_vmem request refers only to system RAM, and does not need to account for the GPU RAM used. The full GPU RAM is automatically granted when you request a GPU.

Requesting exclusive access

Requesting exclusive access on the GPU nodes will block other GPU jobs from starting. Please only request exclusive access if you are also requesting the maximum number of GPUs available in a single GPU node, and your code supports multiple GPUs.

Short Queue Testing

Request one short queue GPU

```shell
#!/bin/bash
#$ -cwd
#$ -j y
#$ -pe smp 8 # 8 cores (8 cores per GPU)
#$ -l h_rt=1:0:0 # 1 hour runtime (required to run on the short queue)
#$ -l h_vmem=11G # 11 * 8 = 88G total RAM
#$ -l gpu=1 # request 1 GPU

./run_code.sh
```

Request two short queue GPUs

```shell
#!/bin/bash
#$ -cwd
#$ -j y
#$ -pe smp 16 # 16 cores (8 cores per GPU)
#$ -l h_rt=1:0:0 # 1 hour runtime (required to run on the short queue)
#$ -l h_vmem=11G # 11 * 16 = 176G total RAM
#$ -l gpu=2 # request 2 GPUs

./run_code.sh
```

Request four short queue GPUs (whole node)

```shell
#!/bin/bash
#$ -cwd
#$ -j y
#$ -pe smp 32 # 32 cores (8 cores per GPU)
#$ -l h_rt=1:0:0 # 1 hour runtime (required to run on the short queue)
#$ -l gpu=4 # request 4 GPUs
#$ -l exclusive # request exclusive access

./run_code.sh
```

Production GPU Nodes

Request one GPU

```shell
#!/bin/bash
#$ -cwd
#$ -j y
#$ -pe smp 8 # 8 cores (8 cores per GPU)
#$ -l h_rt=240:0:0 # 240 hours runtime
#$ -l h_vmem=11G # 11 * 8 = 88G total RAM
#$ -l gpu=1 # request 1 GPU

./run_code.sh
```

If requesting exclusive access on a node with 1 GPU card installed (DDG nodes), please also specify the node type with the #$ -l node_type=ddg parameter. Otherwise, you may end up requesting exclusive access to a node with 4 GPU cards, and your job may queue for a very long time.

Request two GPUs

```shell
#!/bin/bash
#$ -cwd
#$ -j y
#$ -pe smp 16 # 16 cores (8 cores per GPU)
#$ -l h_rt=240:0:0 # 240 hours runtime
#$ -l h_vmem=11G # 11 * 16 = 176G total RAM
#$ -l gpu=2 # request 2 GPUs

./run_code.sh
```

Request four GPUs (whole node)

```shell
#!/bin/bash
#$ -cwd
#$ -j y
#$ -pe smp 32 # 32 cores (8 cores per GPU)
#$ -l h_rt=240:0:0 # 240 hours runtime
#$ -l gpu=4 # request 4 GPUs
#$ -l exclusive # request exclusive access

./run_code.sh
```

If you are requesting all GPUs on a node, choosing exclusive mode will give you access to all of the node's resources. Note that requesting a whole node will likely result in a long queueing time, unless you have access to an "owned" GPU node that your research group has purchased.

Submitting jobs to an owned node

If your research group has purchased a GPU node, by default the scheduler will first check for available slots on your owned node(s), and then on the public GPU nodes (if applicable). You may want to restrict your job to your owned nodes only (e.g. for performance, or to ensure consistency). To do so, add the following line to the resource request section at the top of your job script:

```shell
#$ -l owned
```

Getting help

If you are unsure about how to configure your GPU jobs, please contact us for assistance.